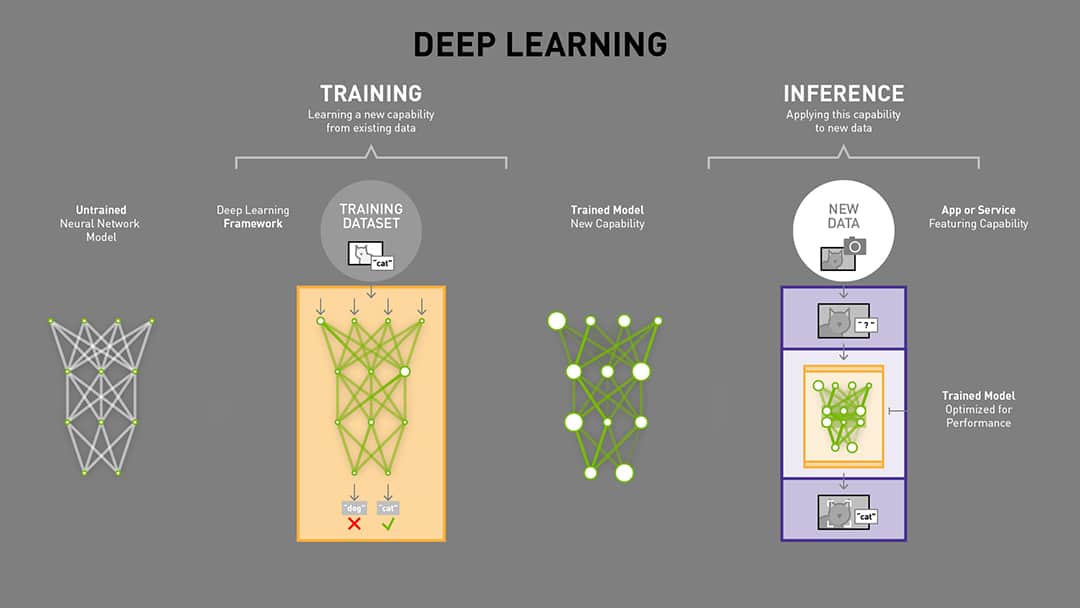

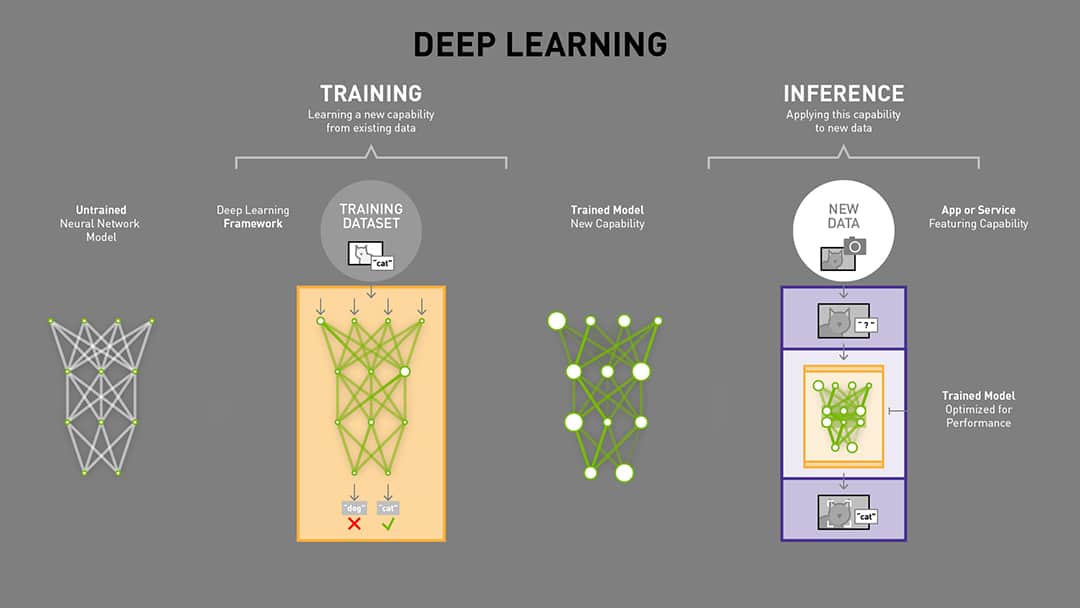

AI inference is the process of using a trained AI model to make predictions or decisions on new data. It is the final step in the AI development process, and it is what allows AI models to be used in real-world applications.

AI models are typically trained on large datasets. In supervised learning, each example is labeled with the correct output, and the model learns the patterns and relationships that link inputs to outputs. Once trained, the model can make predictions on new data that it has never seen before.
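The split between training and inference can be illustrated with a deliberately tiny example. The sketch below assumes a nearest-centroid classifier, chosen only because it fits in a few lines; the function names `train` and `infer` and the sample data are hypothetical, and real models are far larger.

```python
from math import dist

def train(examples):
    """Training: compute one centroid (mean point) per label from labeled data."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}

def infer(model, features):
    """Inference: return the label whose centroid is closest to the new point."""
    return min(model, key=lambda label: dist(model[label], features))

# Hypothetical labeled training data: (features, correct output).
training_data = [
    ([1.0, 1.0], "small"),
    ([2.0, 1.0], "small"),
    ([8.0, 9.0], "large"),
    ([9.0, 8.0], "large"),
]

model = train(training_data)           # done once, ahead of time
prediction = infer(model, [7.5, 8.0])  # a point that is not in the training data
print(prediction)
```

Training runs once over the labeled data, while `infer` can then be called as often as needed on new inputs.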

AI inference is used in a wide range of applications, including:

- Image recognition: AI models can be used to identify objects and people in images. This is used in applications such as facial recognition, self-driving cars, and medical imaging.

- Natural language processing: AI models can be used to understand and generate human language. This is used in applications such as machine translation, chatbots, and voice assistants.

- Recommendation systems: AI models can be used to recommend products, movies, and other content to users. This is used in applications such as e-commerce websites, music streaming services, and social media platforms.

How Does AI Inference Work?

AI inference works by feeding a new data point into the trained AI model. The model then applies the parameters it learned during training to produce a prediction or decision for that data point.

For example, an AI model trained to identify objects in images can identify the objects in a new image. The model first extracts features from the image, such as the color, shape, and texture of the objects. It then uses these features, together with the patterns it learned during training, to predict what the objects in the image are.
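The flow described above, from extracted features to a prediction, can be sketched as a single forward pass. This is a minimal illustration rather than how any particular vision model works: the feature values, weights, biases, and labels are all made up, and a real image model would learn its own features through many layers.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict(features, weights, biases, labels):
    """Forward pass: one weighted sum per class, then softmax, then pick the best."""
    scores = [
        sum(w * x for w, x in zip(row, features)) + b
        for row, b in zip(weights, biases)
    ]
    probs = softmax(scores)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs

# Hypothetical parameters that training would have produced.
LABELS = ["cat", "car"]
WEIGHTS = [[2.0, -1.0, 0.5],   # weights for "cat"
           [-1.0, 2.0, 0.0]]   # weights for "car"
BIASES = [0.0, 0.1]

# Hypothetical features already extracted from a new image
# (for instance fur-like texture, metallic color, roundness).
new_image_features = [0.9, 0.1, 0.4]

label, probabilities = predict(new_image_features, WEIGHTS, BIASES, LABELS)
print(label, probabilities)
```

Only the learned parameters are needed at this point; the training data itself plays no part in the call to `predict`.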

The speed and accuracy of AI inference depend on several factors, including the complexity of the AI model, the size of the training data, and the hardware on which inference runs.
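To make the link between model complexity and inference cost concrete, one rough proxy is the number of multiply-accumulate operations needed to process a single input. The sketch below assumes a plain stack of fully connected layers; the layer widths are hypothetical, and real latency also depends on memory bandwidth, batching, and the hardware.

```python
def count_macs(layer_sizes):
    """Multiply-accumulate operations for one input through dense layers."""
    return sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

# Hypothetical layer widths: input, hidden layers, output.
small_model = [784, 64, 10]
large_model = [784, 1024, 1024, 10]

small_macs = count_macs(small_model)   # 784*64 + 64*10
large_macs = count_macs(large_model)   # 784*1024 + 1024*1024 + 1024*10
ratio = large_macs / small_macs

print(small_macs, large_macs, round(ratio, 1))
```

Under these assumptions the larger model needs several dozen times more arithmetic per prediction, which is the kind of gap that faster hardware is meant to absorb.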

Advantages of AI Inference

AI inference offers a number of advantages over traditional methods of making predictions and decisions. These advantages include:

- Accuracy: AI models can be trained to make very accurate predictions and decisions. This is because they are able to learn complex patterns and relationships in the data.

- Speed: AI models can make predictions and decisions very quickly. This is important for applications such as self-driving cars and medical imaging, where decisions need to be made in real time.

- Scalability: AI models can be scaled to make predictions and decisions on large volumes of data. This is important for applications such as e-commerce websites and social media platforms, which need to process data from millions of users.
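One common way to handle large volumes of requests is to group inputs into batches so the model processes several at once. The sketch below only shows the batching logic; `score_batch` is a hypothetical stand-in for a real model call, and the batch size is an arbitrary choice.

```python
from itertools import islice

def batched(items, batch_size):
    """Yield successive lists of at most batch_size items."""
    iterator = iter(items)
    while batch := list(islice(iterator, batch_size)):
        yield batch

def score_batch(batch):
    """Hypothetical model call: a stand-in that simply doubles each input."""
    return [2 * x for x in batch]

def run_inference(inputs, batch_size=4):
    """Process a stream of inputs batch by batch and collect the outputs."""
    results = []
    for batch in batched(inputs, batch_size):
        results.extend(score_batch(batch))
    return results

outputs = run_inference(range(10), batch_size=4)
batch_sizes = [len(b) for b in batched(range(10), 4)]
print(outputs, batch_sizes)
```

Larger batches usually improve throughput on parallel hardware, at the cost of each request waiting a little longer for its batch to fill.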

Challenges of AI Inference

While AI inference offers a number of advantages, there are also some challenges that need to be addressed. These challenges include:

- Explainability: It can be difficult to explain how AI models make predictions and decisions. This is because AI models are often complex and opaque.

- Fairness: AI models can be biased, which can lead to unfair outcomes. Careful selection of the training data and ongoing monitoring of model performance help to reduce bias.

- Security: AI models can be vulnerable to adversarial attacks, in which inputs are deliberately crafted to fool the model into making incorrect predictions. Security measures are needed to protect AI models from such attacks.
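The security point can be illustrated on a toy model. The sketch below assumes a linear classifier with made-up weights and uses a sign-of-gradient step, similar in spirit to the fast gradient sign method, to show how a small change to every feature can flip the prediction. The names, labels, and numbers are hypothetical.

```python
def score(weights, bias, features):
    """Linear classifier: a positive score means 'spam', otherwise 'ham'."""
    return sum(w * x for w, x in zip(weights, features)) + bias

def classify(weights, bias, features):
    return "spam" if score(weights, bias, features) > 0 else "ham"

def sign(value):
    return (value > 0) - (value < 0)

def perturb(weights, features, epsilon):
    """Shift every feature by at most epsilon in the direction that lowers the score.

    For a linear model the gradient of the score with respect to the input
    is the weight vector itself, so the step is -epsilon * sign(weight).
    """
    return [x - epsilon * sign(w) for w, x in zip(weights, features)]

# Hypothetical trained parameters and input.
WEIGHTS = [1.0, -2.0, 0.5]
BIAS = -0.2
original = [0.6, 0.1, 0.4]

adversarial = perturb(WEIGHTS, original, epsilon=0.15)
before = classify(WEIGHTS, BIAS, original)
after = classify(WEIGHTS, BIAS, adversarial)
print(before, after)
```

No feature moves by more than `epsilon`, yet the predicted class changes, which is why robustness usually has to be tested for explicitly.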

Use Cases of AI Inference

Beyond the image recognition, natural language processing, and recommendation systems described above, AI inference is used in applications such as:

- Fraud detection: AI models are used to detect fraudulent transactions and other types of fraud. This is used in applications such as banking and insurance.

- Medical diagnosis: AI models are used to assist doctors with diagnosing diseases and recommending treatments. This is used in applications such as oncology and radiology.

The Future of AI Inference

AI inference is a rapidly developing field with a wide range of potential applications. As AI models become more complex and accurate, AI inference is expected to be used in even more applications.

One of the most promising areas of AI inference is in edge computing. Edge computing is a distributed computing paradigm that brings computation and data storage closer to the sources of data. This is important for AI inference applications that need to be performed


